Our website includes a user login, and the user authentication process occasionally stopped working. Our standard web-monitoring tools could ping the home page and verify that it was responding, but interacting with the page was beyond the capabilities of the tool we had in place. We learned about a real issue only when a customer alerted us to it. This was not acceptable; we had to find a better way.

We had previously developed and executed a series of load tests using a load testing tool that allowed us to run large numbers of users against the test website, performing a variety of actions. But we needed a way to run a single user through a simple script repeatedly, twenty-four hours a day, seven days a week, so we would be alerted to an issue on our production system before it affected our real customers. Our load testing software could run this test as a single user, but it had no way to generate an alert when it detected an issue.

Working with our vendor, we discovered they offered a simple solution: Use a different application to run a load test script as a single user in a repeating process and send alerts when something is wrong. We have had this process in place now for three years, and it has been a great solution. Here’s how we did it.

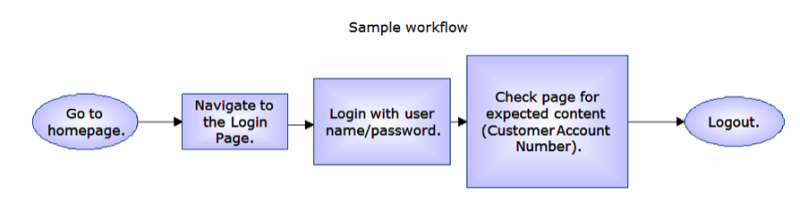

Designing the Test

The first step is to do some business analysis to determine what to test and what a failure looks like. While similar to a load test, this test focuses both on page load times and on the result of each scripted action. You also need to be able to log in to the production system repeatedly using a known username/password combination.

The goal for this test is to simply verify the website is active and ready for use. Our tests did not include a transaction (sales order), but you could include this operation; it would just require more work.

These were our checks:

- Each page should load in less than 5,000 ms (five seconds)

- Each page should load correctly

- Each page should pass a text check (verification that the page loaded the expected content)
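To make the three checks concrete, here is a minimal Python sketch of evaluating one scripted page fetch against them. It is not our vendor's rule engine; the `PageResult` structure and `check_page` function are hypothetical, and treating "loads correctly" as an HTTP 200 status is an assumption.

```python
from dataclasses import dataclass

# Threshold from the checks above: each page must load in under 5,000 ms.
MAX_LOAD_MS = 5000


@dataclass
class PageResult:
    """One page fetched by the scripted single user (hypothetical structure)."""
    name: str
    status: int        # HTTP status code returned for the page
    elapsed_ms: float  # time taken to load the page, in milliseconds
    body: str          # page content, used for the text check


def check_page(result: PageResult, expected_text: str) -> list[str]:
    """Return a list of failure reasons; an empty list means the page passed."""
    failures = []
    # Check 1: load time under the five-second limit.
    if result.elapsed_ms >= MAX_LOAD_MS:
        failures.append(f"{result.name}: slow ({result.elapsed_ms:.0f} ms)")
    # Check 2: page loaded correctly (assumed here to mean HTTP 200).
    if result.status != 200:
        failures.append(f"{result.name}: HTTP {result.status}")
    # Check 3: text check, i.e. the expected content is actually on the page.
    if expected_text not in result.body:
        failures.append(f"{result.name}: missing text {expected_text!r}")
    return failures
```

Returning the reasons rather than a bare pass/fail keeps the information you will want later in an alert email.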

Coding the Script

Now that you have a design, you can create your script.

First, we chose a valid user account to use in this process. (It needed to exist in production but be identifiable as a test account.)

We have also added monitoring of some of our intranet sites used exclusively by authorized users. This authorization is handled using SSL, so for those sites, we had to add some specialized code to support SSL and port mapping.

We created the script using our normal load test scripting tool, making sure the design included any special rules the monitoring tool requires. If you have a support contract, your vendor may have helpful information in this area.

Once your script is designed and running correctly, you can move it to the monitoring application.

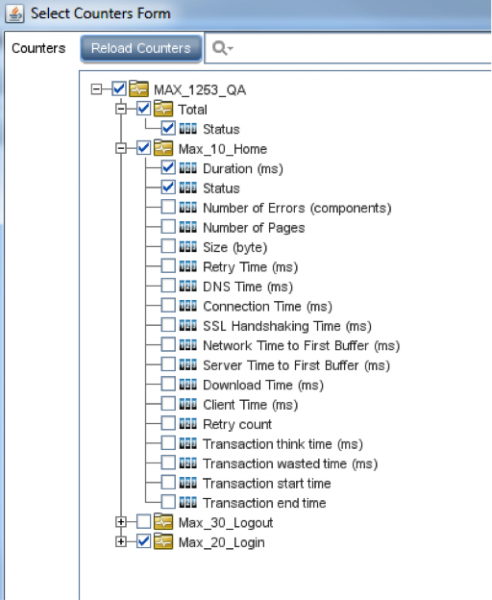

Building the Monitor

Check with your vendor about which elements of the script need to come over; in some cases you need all the runtime files, not just the script. The good news is that these files are small.

When creating your monitor, first determine which web elements to track. Depending on your solution, each element you monitor can use a portion of your license capacity, so you may choose to limit how many elements you monitor. In our case, we wanted to monitor only the page load result and how long the page took to load, but you may choose other elements as well, such as any of those shown below.

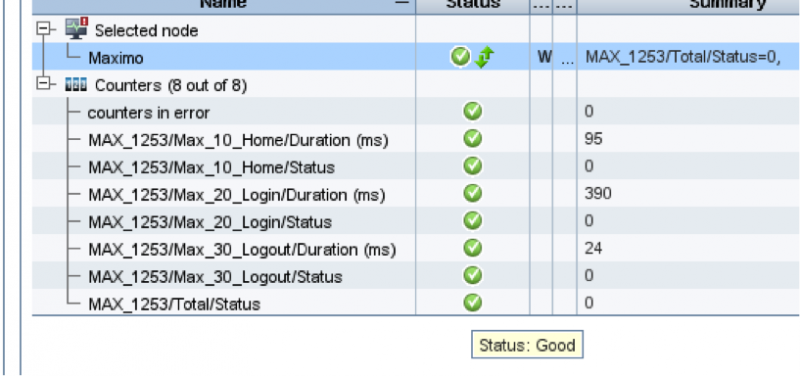

After you save the monitor, you can see the results of the initial creation on the monitor dashboard.

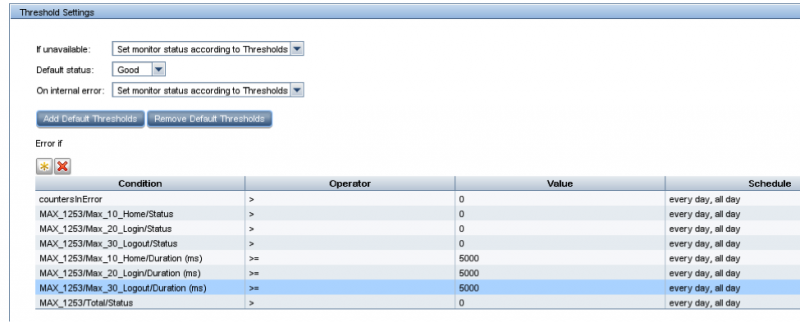

Creating Monitor Rules

Once you have the monitor built, you can create the rules the system uses to determine whether a run passes or fails. We chose to monitor only page status and load time.

After you have your criteria set for a pass/fail condition, the dashboard reflects the status.

You also need to decide how often you want the test to run. You can get false alerts if a second test tries to start while the first is still running, so we allow three minutes between tests.
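The no-overlap rule can be sketched in Python, assuming "three minutes between tests" means three minutes from the start of one run to the start of the next, and that a run which overruns simply delays the next one. The monitoring tool handles this for you; the `run_schedule` function and its injectable `clock` and `sleep` parameters are hypothetical, included so the loop can be exercised without actually waiting.

```python
import time
from typing import Callable, Optional

INTERVAL_S = 180.0  # three minutes from the start of one run to the next


def run_schedule(check: Callable[[], bool],
                 on_result: Callable[[bool], None],
                 interval_s: float = INTERVAL_S,
                 max_runs: Optional[int] = None,
                 clock: Callable[[], float] = time.monotonic,
                 sleep: Callable[[float], None] = time.sleep) -> None:
    """Run `check` repeatedly, strictly one run at a time (hypothetical helper).

    The next run never starts until the current one returns, so runs cannot
    overlap. If a run takes longer than the interval, the next one starts
    right away instead of queuing up extra runs.
    """
    runs = 0
    while max_runs is None or runs < max_runs:
        started = clock()
        on_result(check())  # blocks until this run has finished
        runs += 1
        elapsed = clock() - started
        # Wait out the rest of the interval; never a negative sleep.
        sleep(max(0.0, interval_s - elapsed))
```

Because each run is awaited before the next begins, a slow site produces back-to-back runs rather than two scripts fighting over the same test account.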

Creating Alert Rules

Now that you have set up the tests and defined what a failure is, you need to set up rules for whom to notify when a possible failure is detected.

Our tool allows more than ten different alert actions, but we chose email for our alerting process. We use a distinct email subject that identifies the website with the issue. Alerts can go out as either an email or a text message, provided the recipient's cell carrier offers an email address for texting the phone.
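As an illustration, here is a sketch using Python's standard `email` package to build a message whose subject names the affected website. The subject wording, the `build_alert` helper, and all addresses are hypothetical (the `example.com` addresses are placeholders). A carrier's email-to-text address would simply be another recipient.

```python
from email.message import EmailMessage


def build_alert(site: str, failures: list[str], recipients: list[str],
                sender: str = "monitor@example.com") -> EmailMessage:
    """Build an alert whose subject identifies the failing website (hypothetical)."""
    msg = EmailMessage()
    # Distinct, site-specific subject so recipients can triage at a glance.
    msg["Subject"] = f"MONITOR ALERT: {site} login check failing"
    msg["From"] = sender
    msg["To"] = ", ".join(recipients)
    # Keep the body short so it still reads well when delivered as a text.
    msg.set_content("\n".join(failures) or "Check failed with no details.")
    return msg
```

Sending it is one call to the standard library's `smtplib.SMTP(host).send_message(msg)` against your mail relay; that step is left out here so the sketch runs without a server.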

One thing to recognize is that the technology is fallible, and occasionally things break that are not on your website. To reduce the number of false alarms, we do not generate an alert unless the test fails three times in a row. We also set rules to repeat the email only once an hour (every twenty test runs), because when the ops team is dealing with an issue, they don’t need a stream of emails about a problem they are already addressing.
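The two noise-reduction rules interact, so a sketch may help. This hypothetical `AlertPolicy` class (our tool does the equivalent through its own settings) alerts on the third consecutive failure and then repeats only every twenty further failures, which at one run every three minutes works out to once an hour. It assumes a single passing run resets the count.

```python
class AlertPolicy:
    """Decide whether a test result should trigger an alert (hypothetical).

    - No alert until `threshold` consecutive failures.
    - While the failure continues, repeat the alert only every
      `repeat_every` failed runs (20 runs x 3 minutes = 1 hour).
    - Any passing run resets the counter.
    """

    def __init__(self, threshold: int = 3, repeat_every: int = 20):
        self.threshold = threshold
        self.repeat_every = repeat_every
        self.consecutive_failures = 0

    def record(self, passed: bool) -> bool:
        """Record one run; return True if an alert should be sent now."""
        if passed:
            self.consecutive_failures = 0
            return False
        self.consecutive_failures += 1
        n = self.consecutive_failures
        # Fires on failure 3, then 23, 43, ... while the outage lasts.
        return n >= self.threshold and (n - self.threshold) % self.repeat_every == 0
```

Counting runs rather than wall-clock time keeps the logic simple; if the run interval changes, `repeat_every` has to change with it.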

We can also go in and disable the alert as needed to prevent planned downtimes from generating false email alerts.
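We disable alerts by hand, but if your tool (or a wrapper you write around it) supports it, scheduled maintenance windows do the same job without anyone having to remember. This is a different technique from what we use; a minimal sketch, with the function name and window times as hypothetical examples:

```python
from datetime import datetime


def alerts_suppressed(now: datetime,
                      windows: list[tuple[datetime, datetime]]) -> bool:
    """Return True if `now` falls inside any planned-downtime window.

    Each window is a (start, end) pair; start is inclusive, end exclusive.
    """
    return any(start <= now < end for start, end in windows)


# Hypothetical window: a Saturday-night release from 22:00 to 02:00.
WINDOWS = [(datetime(2024, 5, 4, 22, 0), datetime(2024, 5, 5, 2, 0))]
```

The monitor can keep running during the window so the reports still show the outage; only the alert step consults `alerts_suppressed`.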

Generating Reports

These monitoring tools provide a nice way to examine performance over time and generate useful reports. These can be cut and pasted or exported as HTML. (I find it easier to cut and paste the relevant information rather than trying to explain all the data the report can generate.)
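If you export raw results rather than using the built-in reports, the kind of over-time summary these tools produce is easy to approximate. The sketch below assumes each run was recorded as a hypothetical `(timestamp, passed, load_ms)` tuple and rolls the runs up into daily availability and average load time.

```python
from collections import defaultdict
from datetime import date, datetime
from statistics import mean


def daily_summary(samples: list[tuple[datetime, bool, float]]
                  ) -> dict[date, tuple[float, int]]:
    """Roll up monitor runs into {day: (availability %, average load ms)}."""
    by_day = defaultdict(list)
    for ts, passed, load_ms in samples:
        by_day[ts.date()].append((passed, load_ms))
    summary = {}
    for day in sorted(by_day):
        runs = by_day[day]
        # Share of runs that passed all checks, as a percentage.
        availability = 100.0 * sum(passed for passed, _ in runs) / len(runs)
        summary[day] = (round(availability, 1), round(mean(ms for _, ms in runs)))
    return summary
```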

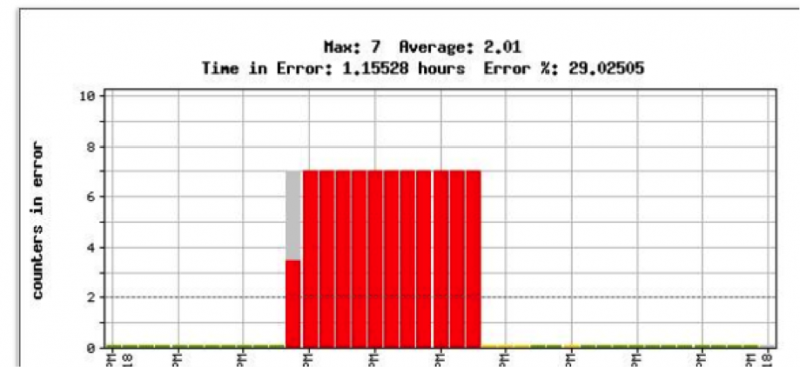

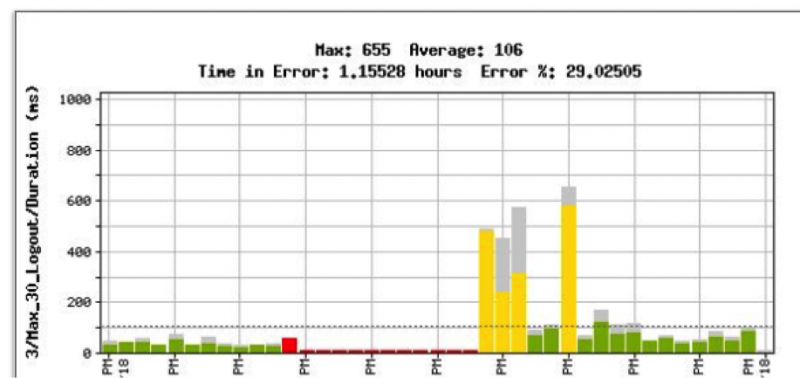

These are sample reports from our QA instance, of the website being down and changes in time needed for the login process:

Maintaining Reliability

Our tool also includes a dashboard view that provides a single place to quickly check all monitors and see if there is an issue with one or more of them that may indicate a bigger problem.

Initially, this tool was a bit of a hard sell to the ops team, as testing with a virtual user was a new concept to some. But now that it runs like a silent sentry, never sleeping or taking a vacation, teams with new applications come to us asking us to monitor their sites to ensure customer reliability is maintained.

This has improved the reliability of our operation—and isn’t that the role of QA?
