DevOps is not only about developing and releasing applications; testing is also an essential part of the practice. However, the role of testing in DevOps is less visible than that of the other practices, and there are often disputes about how testing should be performed.

Due to the increasingly automated nature of the software delivery lifecycle in DevOps, continuous testing has become a popular movement. However, test automation gives many testers pause. Though automation can be helpful, particularly in a DevOps environment, it cannot (and should not) replace testers entirely.

In particular, the context-driven testing community emphasizes that testing and automated checking are different and that validating expected results is not testing. Through exploring and experimenting, testers play a crucial role in ensuring the quality of software products.

However, by insisting that real testing activities cannot be automated at all, testers leave themselves out of the continuous testing conversation. That introduces the risk that important improvements in test automation will be shaped more by software engineers than by the testing community. Testing needs an adequate response to the accelerating pace of development, with its shorter implementation and release cycles. The question is not whether testing will change, but who will drive the innovations in testing.

A Case for Shifting Right

You’ve probably heard of the “shift left” movement in software testing, describing the trend toward teams starting testing as early as possible, testing often throughout the lifecycle, and working on problem prevention instead of detection. The goals are to increase quality, shorten test cycles, and reduce the possibility of unpleasant surprises at the end of the development cycle or in production.

But there’s another new idea: shift right. In this approach, testing moves right into production, covering functionality, performance, failure tolerance, and user experience through controlled experiments. By also testing in production, you may discover new and unexpected usage scenarios.

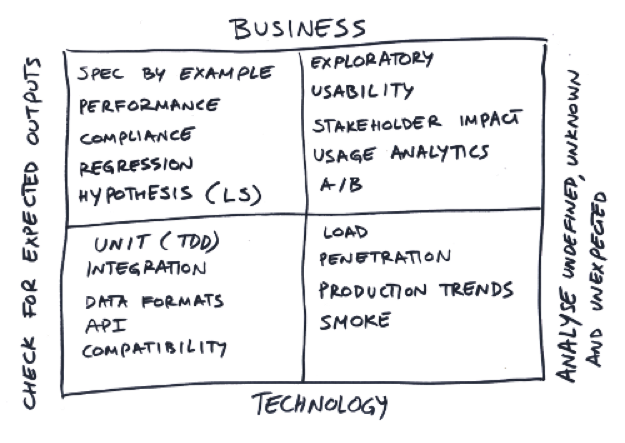

The popular agile testing quadrants model classifies tests by whether they are business- or technology-facing and whether they support the team (guide development) or critique the product. However, Gojko Adzic observed that "with shorter iterations and continuous delivery, it’s difficult to draw the line between activities that support the team and those that critique the product." As an alternative, he suggested distinguishing between checking for expected outputs and analyzing the undefined, unknown, and unexpected.

Let’s look at some perhaps unfamiliar testing approaches that focus on the unknown and unexpected.

Finding New Ways to Test

Testing web services with live production traffic

The goal of testing is to ensure that the software product works for the consumer. Therefore, the best input for tests is actual production traffic. This does not necessarily mean that the testing has to be done in production; by capturing, duplicating, and redirecting live production traffic to test environments, it is possible to use real-time production API calls as test input. By using live production traffic to test the new version of a web service before its release to production, you can determine whether unpredictable changes in API usage cause unexpected behavior, such as slower response times or deviations in CPU consumption.
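A minimal sketch of the replay side of this idea, assuming the production requests have already been captured and that each service version is reachable through a Python callable. `Request`, `replay`, and `latency_regression` are hypothetical names chosen for illustration, and the 20% tolerance on median latency is an arbitrary example threshold, not a recommendation.

```python
import statistics
import time
from typing import Callable, Dict, List

# Hypothetical shape of a captured production API call (e.g. path plus query parameters).
Request = Dict[str, str]


def replay(requests: List[Request], handler: Callable[[Request], object]) -> List[float]:
    """Send each captured request to one service version and record its latency in seconds."""
    latencies = []
    for request in requests:
        start = time.perf_counter()
        handler(request)
        latencies.append(time.perf_counter() - start)
    return latencies


def latency_regression(baseline: List[float], candidate: List[float], tolerance: float = 1.2) -> bool:
    """Flag a regression when the candidate's median latency exceeds the baseline's by more than `tolerance`."""
    return statistics.median(candidate) > tolerance * statistics.median(baseline)
```

The same captured sample would be replayed against the current and the new version, e.g. `latency_regression(replay(sample, current), replay(sample, new))`, where `current` and `new` are hypothetical handlers. Comparing medians rather than individual requests keeps a single slow outlier from raising an alarm.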

Twitter uses an automated testing tool called Diffy that finds bugs by acting as a proxy: it receives HTTP requests, directs them side by side to an instance of the new version and to the production version, compares the responses, and reports any regressions. Following this approach, API calls from production can be redirected to two product variants, and an automated check can detect regressions in their responses. For example, for an e-commerce search service, the tool could detect variations in the number of results and their ranking. It cannot determine whether end users would perceive the results as correct; that takes exploratory testing or actual user feedback. But because it can compare many samples drawn from unpredictable production requests, this automated check is a good basis for detecting deviations and thus provides input for further manual analysis.
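The comparison step can be sketched for the e-commerce search example. This is not Diffy's code or API: Diffy works on raw HTTP responses and additionally sends each request to a second production instance so it can filter out nondeterministic noise. The functions below are hypothetical and assume both versions are exposed as callables that return a ranked list of product IDs.

```python
from typing import Callable, Dict, List

Search = Callable[[str], List[str]]  # hypothetical: query -> ranked product IDs


def compare_results(production: List[str], candidate: List[str], top_k: int = 10) -> Dict[str, object]:
    """Describe how the candidate's ranked results differ from production's for one query."""
    prod_top, cand_top = production[:top_k], candidate[:top_k]
    return {
        "count_delta": len(candidate) - len(production),
        "missing": sorted(set(prod_top) - set(cand_top)),
        "added": sorted(set(cand_top) - set(prod_top)),
        # Positions (0-based) where the two rankings disagree.
        "rank_changes": [i for i, (p, c) in enumerate(zip(prod_top, cand_top)) if p != c],
    }


def diff_traffic(queries: List[str], production: Search, candidate: Search) -> Dict[str, Dict[str, object]]:
    """Send every sampled query to both versions and keep only the queries whose results differ."""
    report = {}
    for query in queries:
        diff = compare_results(production(query), candidate(query))
        if diff["count_delta"] or diff["missing"] or diff["added"] or diff["rank_changes"]:
            report[query] = diff
    return report
```

The report only flags deviations; deciding whether a changed ranking is actually worse remains the manual analysis described above.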

A/B testing

Compared to the previous approach, A/B testing goes one step further because it is based on feedback from real users. It compares two versions of a product hosted in production: you split your website's production traffic between the two versions to measure metrics such as the conversion rate. Because A/B testing is done in production with real customers, it provides a way of critiquing the product.
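A minimal sketch of the traffic split and the metric, assuming each visitor has a stable user ID and contributes one (user ID, converted) observation. Hashing the ID, rather than picking a variant at random on every request, keeps a returning user in the same variant. The experiment name `checkout-test` and the 50/50 split are illustrative assumptions.

```python
import hashlib
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def assign_variant(user_id: str, experiment: str = "checkout-test", split: float = 0.5) -> str:
    """Deterministically map a user to variant A or B by hashing the experiment name and user ID."""
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode("utf-8")).hexdigest()
    bucket = int(digest[:8], 16) / 2**32  # roughly uniform value in [0, 1)
    return "A" if bucket < split else "B"


def conversion_rates(observations: Iterable[Tuple[str, bool]]) -> Dict[str, float]:
    """Compute the conversion rate per variant from (user_id, converted) pairs."""
    visitors: Dict[str, int] = defaultdict(int)
    conversions: Dict[str, int] = defaultdict(int)
    for user_id, converted in observations:
        variant = assign_variant(user_id)
        visitors[variant] += 1
        conversions[variant] += int(converted)
    return {variant: conversions[variant] / visitors[variant] for variant in visitors}
```

Before declaring a winner, the difference between the two rates should be checked for statistical significance (for example, with a two-proportion z-test); with small samples, random noise easily looks like an improvement.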

Canary release

The canary release strategy is used to reduce the risk of introducing a new software version in production. This is done by slowly rolling out the change to a small subset of instances before applying it to the entire infrastructure.

The difference between a canary release and A/B testing is that A/B testing measures how users respond to a change in functionality, while a canary release does not look at user feedback. A canary release, like other release strategies such as the staged release, is about releasing new software safely, ideally detecting failures automatically and rolling back predictably.
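The "detect failures automatically, roll back predictably" part can be sketched as a staged rollout loop. `observe_error_rate` is a hypothetical hook that would route the given percentage of traffic to the new version and read its error rate from monitoring; the stage sizes and the threshold of 1.5 times the baseline error rate are arbitrary examples.

```python
from dataclasses import dataclass
from typing import Callable, List, Sequence, Tuple


@dataclass
class Stage:
    percent: int       # share of traffic routed to the new version
    error_rate: float  # error rate observed for the new version at this stage
    healthy: bool


def canary_rollout(
    observe_error_rate: Callable[[int], float],
    baseline_error_rate: float,
    stages: Sequence[int] = (1, 5, 25, 50, 100),
    max_ratio: float = 1.5,
    absolute_slack: float = 0.001,
) -> Tuple[bool, List[Stage]]:
    """Widen the rollout stage by stage; stop at the first unhealthy stage so the caller can roll back."""
    # The slack avoids aborting on noise when the baseline error rate is close to zero.
    threshold = baseline_error_rate * max_ratio + absolute_slack
    history: List[Stage] = []
    for percent in stages:
        rate = observe_error_rate(percent)
        healthy = rate <= threshold
        history.append(Stage(percent, rate, healthy))
        if not healthy:
            return False, history  # roll back: the new version never reaches the full fleet
    return True, history
```

Returning the stage history, not just a boolean, gives the team a record of where the rollout stopped and what the canary's error rate was at that point.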

Testing in production

Why would you test in production and not only in dedicated test environments? Testing in production makes it possible to draw on real usage and analyze use cases that are difficult to anticipate. In the end, the only way to prove that software is ready for production is to release it to production.

The traditional approach is to execute as many tests as possible before the release, until there is confidence that the product is robust and will not fail. For DevOps organizations, proper testing is also crucial, but they pay particular attention to the ability to fail small and fast if a failure happens in production. This can be achieved by deploying only small changes and releasing incrementally while closely monitoring the application's health. If a failure happens, it is important to be able to react quickly through automation. Therefore, released does not mean done; released software still needs to be reviewed and monitored.

Monitoring can also rely on automated checks. Semantic monitoring uses actual tests to continuously evaluate the application in production. This approach can be useful, for example, to test the interaction of microservices at run time. There should be no clear distinction between monitoring and testing. Because production tests interact with a live production system rather than a controlled, predictable environment, they are essential to ensuring a reliable production service.
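Semantic monitoring can be sketched as a set of ordinary test functions that a scheduler runs against production, raising an alert whenever one fails. The check functions, the `alert` callback, and the 60-second interval are hypothetical; in a real setup the checks would call the production API (for example, place and cancel a test order) and the alert would go to the on-call system.

```python
import time
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional


@dataclass
class CheckResult:
    name: str
    passed: bool
    detail: str = ""


def run_checks(checks: Dict[str, Callable[[], None]]) -> List[CheckResult]:
    """Run each check once; a check passes if it returns without raising."""
    results = []
    for name, check in checks.items():
        try:
            check()
            results.append(CheckResult(name, True))
        except AssertionError as exc:  # the check ran, but its expectation failed
            results.append(CheckResult(name, False, str(exc)))
        except Exception as exc:  # the check could not run at all (timeout, connection error, ...)
            results.append(CheckResult(name, False, f"error: {exc!r}"))
    return results


def monitor(
    checks: Dict[str, Callable[[], None]],
    alert: Callable[[CheckResult], None],
    interval_s: float = 60.0,
    rounds: Optional[int] = None,
) -> None:
    """Run the checks every `interval_s` seconds (forever if `rounds` is None) and alert on each failure."""
    completed = 0
    while rounds is None or completed < rounds:
        for result in run_checks(checks):
            if not result.passed:
                alert(result)
        completed += 1
        if rounds is None or completed < rounds:
            time.sleep(interval_s)
```

Writing the checks as plain functions that raise `AssertionError` means the same checks could run both in a pre-release pipeline and against production, which blurs the line between testing and monitoring in the way the paragraph suggests.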

Fault tolerance testing

Chaos Monkey, a tool developed by Netflix and run on Amazon Web Services, intentionally creates production failures by randomly terminating instances and determines whether the system under test can still operate under those failure conditions. Failure will happen, so why not test possible failures in production yourself ahead of time? By failing quickly and inexpensively in a controlled experiment, developers and system engineers are guided toward creating a system that is more robust and can cope with failures.
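The core loop of such a controlled experiment (verify the steady state, inject a failure, verify the steady state again) can be sketched as follows. This is not Chaos Monkey's implementation: `steady_state` and `terminate` are hypothetical hooks that would wrap monitoring and the cloud provider's API, and the in-memory set of instance IDs at the end only simulates a cluster.

```python
import random
from typing import Callable, List, Optional, Sequence, Tuple


def run_chaos_experiment(
    instances: Sequence[str],
    steady_state: Callable[[], bool],
    terminate: Callable[[str], None],
    kill_count: int = 1,
    seed: Optional[int] = None,
) -> Tuple[List[str], bool]:
    """Terminate `kill_count` random instances and report whether the system stayed in its steady state."""
    # Only start an experiment on a healthy system; otherwise the result says nothing.
    if not steady_state():
        raise RuntimeError("system is not in steady state; experiment aborted")
    rng = random.Random(seed)
    victims = rng.sample(list(instances), kill_count)
    for instance in victims:
        terminate(instance)
    return victims, steady_state()


# Simulated cluster: the service counts as healthy while at least two instances are alive.
live = {"i-1", "i-2", "i-3"}
victims, survived = run_chaos_experiment(sorted(live), lambda: len(live) >= 2, live.discard, seed=7)
```

In the simulation, losing one of three instances leaves the service healthy; running the same experiment again would push it below its assumed capacity, which is exactly the kind of weakness such an experiment is meant to expose before a real outage does.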

Shaping the Future of Automation

These examples do not give a comprehensive overview of automated testing in DevOps, but they show that more than simple regression checking can be automated. Automation can also help analyze the undefined, unknown, and unexpected by relying on real production traffic and unpredictable test input. With shorter implementation and release cycles, testing and production move closer together. The testing community should not ignore these developments but should recognize the opportunity to play an important role in driving test automation forward.

User Comments

Excellent, Stefan! I see some value in these approaches. I understand that certain risks would also manifest themselves when testing in production, but the idea is still to foresee them and reduce their impact as early as possible, and what better way to explore them than by testing in production?

Thank you for the great article, Stefan! What if you could test production in development? I think about your shift right; when I present, I talk about shifting completely left. Maybe it forms a complete circle :) We use bots and our continuous dynamic field simulation framework to run completely realistic production activity at scale against entire tech stacks in development. Sometimes we even use metrics from production activities for bot goal seeking. We instrument the systems completely so everyone can see everything and test-fail-fix before any customer pain. We are having a lot of success with clients ranging from SaaS IoT (in-vehicle devices, TV set-top boxes) to mobile apps and web systems. There is just so much value to be found in minding the gap.

Hi Myrna,

The boundaries between development, testing, and releasing/operating get blurred, so you could argue about which trends point toward development and which toward production. Testing with bots is a great idea. What we are doing is to some extent comparable: we use production traffic as test input by duplicating production API calls and using them as test input (for performance tests). See also: http://tinyurl.com/zfhlzem