The test pyramid is a great model for designing your test portfolio. However, the bottom tends to fall out when you shift from progression testing to regression testing. The tests start failing, eroding the number of working unit tests at the base of your pyramid. If you don't have the development resources required for continuous unit test maintenance, there are still things you can do.

The test pyramid is the ideal model for agile teams to use when designing their test portfolio. Unit tests form a solid foundation for understanding whether new code is working correctly:

- They cover code easily: The developer who wrote the code is uniquely qualified to verify that their tests cover their code. It’s easy for the responsible developer to understand what’s not yet covered and create test methods that fill the gaps.

- They are fast and cheap: Unit tests can be written quickly, execute in seconds, and require only simple test harnesses (versus the more extensive test environments needed for system tests).

- They are definitive: When a unit test fails, it’s relatively easy to identify what code must be reviewed and fixed. It’s like looking for a needle in a handful of hay versus trying to find a needle in a heaping haystack.

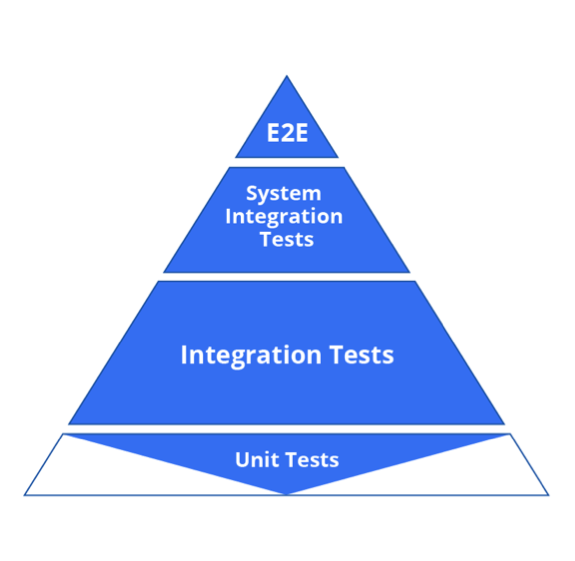

However, there’s a problem with this model: The bottom falls out when you shift from progression testing (checking that the newly added functionality works correctly) to regression testing (checking that this functionality isn’t impacted by future changes). Your test pyramid often becomes a diamond:

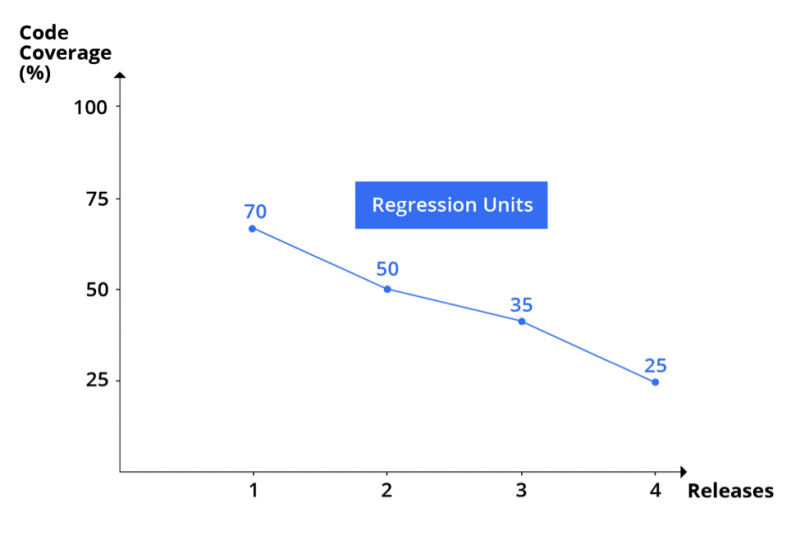

At least, that’s what surfaced in the data we recently collected when examining unit testing practices across mature agile teams. In each sprint, developers are religious about writing the tests required to validate each user story. Typically, it’s unavoidable: Passing unit tests are a key part of the definition of done. By the end of most sprints, there’s a solid base of new unit tests that are critical in determining if the new code is implemented correctly and meets expectations. Our data says these tests typically cover approximately 70 percent of the new code.

From the next sprint on, these tests become regression tests. In many agile approaches, little by little, they start failing—eroding the number of working unit tests at the base of the test pyramid, as well as the level of confidence the test suite once provided.

After a few iterations, the same unit tests that once achieved 70 percent coverage provide only about 50 percent coverage of that original functionality. Our data says this drops to 35 percent after several more iterations, and it typically degrades to 25 percent after six months.
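To put those figures in relative terms, here is a small sketch that expresses each reported coverage level as a share of the original 70 percent. The stage labels and the `retained_share` helper are illustrative names, not part of the study; only the four percentages come from the text above.

```python
# Coverage figures quoted in the article (percent of the originally tested code).
REPORTED_COVERAGE = [
    ("end of the first sprint", 70),
    ("after a few iterations", 50),
    ("after several more iterations", 35),
    ("after six months", 25),
]


def retained_share(current, baseline):
    """Percent of the baseline coverage that is still in place (rounded)."""
    return round(100 * current / baseline)


baseline = REPORTED_COVERAGE[0][1]
for label, coverage in REPORTED_COVERAGE:
    print(f"{label}: {coverage}% covered, "
          f"{retained_share(coverage, baseline)}% of the original safety net")
```

Read this way, only about a third of the original safety net is still in place after six months.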

This subtle erosion can be dangerous if you’re fearlessly changing code, expecting your unit tests to serve as a safety net.

Why Unit Tests Erode

Unit tests erode for a number of reasons. Even though unit tests are theoretically more stable than other types of tests, such as UI tests, they too will inevitably start failing over time.

Code gets extended, refactored, and repaired as the application evolves. In many cases, the implementation changes are significant enough to warrant unit test updates. Other times, the code changes expose the fact that the original test methods and test harness were too tightly coupled to the technical implementation—again, requiring unit test updates.
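As a rough illustration of what "too tightly coupled" can look like, the sketch below contrasts two tests for the same code. `PriceCalculator` and both test functions are hypothetical and exist only for this example. The first test pins a private helper and the exact argument passed to it, so it would break on a harmless refactoring; the second checks only the observable result.

```python
from unittest import mock


class PriceCalculator:
    """Hypothetical class used only for illustration."""

    def __init__(self, tax_rate):
        self.tax_rate = tax_rate

    def _apply_tax(self, amount):
        # Private helper: an implementation detail that may be renamed or inlined.
        return round(amount * (1 + self.tax_rate), 2)

    def total(self, prices):
        return self._apply_tax(sum(prices))


def test_total_coupled_to_implementation():
    # Brittle: asserts that a private helper is called with a specific argument.
    # Renaming or inlining _apply_tax breaks this test even if total() is correct.
    calc = PriceCalculator(tax_rate=0.10)
    with mock.patch.object(calc, "_apply_tax", return_value=33.0) as helper:
        calc.total([10.0, 20.0])
    helper.assert_called_once_with(30.0)


def test_total_checks_behavior():
    # More resilient: asserts only on the public result.
    calc = PriceCalculator(tax_rate=0.10)
    assert calc.total([10.0, 20.0]) == 33.0
```

Both tests pass today. The difference shows up later: only the second one is likely to survive a change to how `total` is computed internally.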

However, those updates aren’t always made. After developers check in the tests for a new user story, they’re under pressure to pick up and complete another user story. And another. And another. Each of those new user stories needs passing unit tests to be considered done. But what happens if the tests for the old user stories start failing?

Usually, nothing. The failures get ignored, or—if all tests must pass to clear a CI/CD quality gate—the offending tests get disabled. Since the developer who wrote that code will have moved on, appropriately resolving the failures would require them to get reacquainted with the long-forgotten code, diagnose why the test is failing, and figure out how to fix it. This isn’t trivial, and it can disrupt progress on the current sprint.

Frankly, unit test maintenance often presents a burden that many developers resent. Just scan Stack Overflow and similar communities to read developer frustrations related to unit test maintenance.

How to Stabilize the Erosion

I know that some exceptional organizations require unit test upkeep and even allocate appropriate resources for it. However, these tend to be organizations with the luxury of SDETs and other development resources dedicated to testing. Many enterprises are already struggling to deliver the volume and scope of software that the business expects, and they simply can’t afford to shift development resources to additional testing.

If your organization lacks the development resources required for continuous unit test maintenance, what can you do?

One option is to have testers compensate for the lost coverage through resilient tests that they can create and control. Professional testers recognize that designing and maintaining tests is their primary job and that they are ultimately evaluated by the success and effectiveness of the test suite. Let’s be honest—who’s more likely to keep tests current: the developers who are pressured to deliver more code faster, or the testers who are rewarded for finding major issues (or blamed for overlooking them)?

In the most successful organizations we studied, testers offset the risk of eroding unit tests by adding integration-level tests, primarily at the API level, when feasible. This enables them to restore the degrading “change-detection safety net” without disrupting developers’ progress on the current sprint.
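A minimal sketch of what such an API-level test might look like follows. It assumes, purely for illustration, a service exposed as a WSGI application with a `GET /orders/<id>` endpoint; `orders_app`, `call_api`, and the response fields are all hypothetical stand-ins. The tests exercise only the public request/response contract, so internal refactoring should not break them.

```python
import json
from wsgiref.util import setup_testing_defaults


def orders_app(environ, start_response):
    """Hypothetical WSGI service standing in for the real API."""
    if environ["REQUEST_METHOD"] == "GET" and environ["PATH_INFO"] == "/orders/42":
        status, payload = "200 OK", {"id": 42, "status": "shipped"}
    else:
        status, payload = "404 Not Found", {"error": "not found"}
    body = json.dumps(payload).encode("utf-8")
    start_response(status, [("Content-Type", "application/json"),
                            ("Content-Length", str(len(body)))])
    return [body]


def call_api(app, method, path):
    """Send one request to a WSGI app in-process; return (status code, JSON body)."""
    environ = {"REQUEST_METHOD": method, "PATH_INFO": path}
    setup_testing_defaults(environ)  # fills in the remaining required WSGI keys
    captured = {}

    def start_response(status, headers, exc_info=None):
        captured["status"] = int(status.split()[0])

    body = b"".join(app(environ, start_response))
    return captured["status"], json.loads(body)


def test_get_order_returns_status_field():
    status, payload = call_api(orders_app, "GET", "/orders/42")
    assert status == 200
    assert payload["status"] == "shipped"


def test_unknown_order_returns_404():
    status, payload = call_api(orders_app, "GET", "/orders/999")
    assert status == 404
    assert "error" in payload
```

In practice the same assertions would typically run against a deployed test environment over HTTP; the in-process call here just keeps the sketch self-contained.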

User Comments

Many people in the field (including me) feel that for a complex system, integration tests are far more important than unit tests. (E.g., see this article.) Also, unit tests create a huge impedance against change: you might want to update something, but to do so you need to update a whole bunch of tests, so you don't update. That's not Agile. IMO, unit tests impede Agile.

Testing exists in order to manage risk. Risk can come from (1) bugs introduced when code is written, or (2) bugs introduced when code is changed. Unit tests are good at helping with both, but for #2, it depends on the language. I have refactored very large code bases in Java and Go and introduced zero bugs as a result - zero - none detected by unit tests or integration tests. Once it all compiled, it worked. I have also refactored code in Ruby, and lots - lots - of bugs were detected by the unit tests. Thus, while unit tests are essential for being able to safely modify Ruby code, they are completely unnecessary to safely modify Java or Go. So the language is a key factor in deciding if you even need unit tests anymore once the code has been written.

Integration tests are usually the big gap in complex systems. Many organizations claim to do "devops" but they only do it at a component level - they still use waterfall practices for integration. Having automated integration tests, and measuring their coverage, is essential for complex systems. It is far more essential than unit tests, and unlike unit tests, integration tests do not impede Agile, because they are black box tests and are not affected by changes to internal contracts or behavior.

Contracts between components are essential. One should also do component-level testing, to verify component contracts. That is not unit testing, though (even if you use JUnit to run the tests) - unit tests are at the internal method level.

Thank you SO MUCH for this article on the pyramid of agility testing! Really insightful stuff. Thanks again for taking the time to share.

Yes, a good articulation of the reality that most development/engineering teams face. It is extremely hard and counter to our tendency to close stories due to severe pressure to deliver.

However, why is this issue only with maintenance of unit tests? Even functional/integration tests will deteriorate over time due to the same pressure. If a team can ignore unit test failures, they can also ignore integration/functional test failures. I am curious to know if this is not the case.

thanks,

venky

Agree, but a very effective way to prevent the integration tests from degrading is to align them with acceptance criteria, and require that they all pass for an Agile story to be "done". To be "done", not only should the component's tests pass, but any integration tests that were broken as a result should be made to pass. If you define your DOD that way, the acceptance tests will be maintained.

The role of unit tests is much less, now that integration testing can be shifted left to local or on-demand test environments. Unit tests tend to be brittle, unless one goes to great lengths to engineer them, which taxes the overall effort. They are brittle because they are white box tests, in that they reflect the internal structure - private methods - of a component.

There are essentially two value propositions for unit tests: (1) support a TDD process, and (2) provide a safety net against change. Both these value propositions are questionable, however. Not everyone uses a TDD process, because it is an inductive process, and many people think reductively. As a safety net, if one uses a type-safe language, one can refactor with confidence - unit tests do not help unless one uses a type-unsafe language. I have personally refactored very large codebases in Go and Java and introduced zero new errors, whereas I would not even attempt to refactor a Ruby or Python project unless it had a comprehensive unit test suite.

Thus, unit tests are, IMO, a tax that one must pay if one uses a type-unsafe language.

For those interested in the tradeoffs of the TDD process, I recommend these highly informative debates between Kent Beck and DHH: https://martinfowler.com/articles/is-tdd-dead/

Yes, the pyramid is better seen as a diamond. For a complex system, integration issues are where the problems are. The loose coupling and distributed nature of today's systems have resulted in most issues being integration issues. That is where your automated tests should focus.

Here is a great article about the changing focus toward integration tests: https://kentcdodds.com/blog/write-tests/