This article also appeared in the May/June 2012 issue of Better Software magazine.

A manager took me aside at a recent engagement. “You know, Johanna, there’s something I just don’t understand about this agile thing. It sure doesn’t look like everyone is being used at 100 percent.”

“And what if they aren’t being used at 100 percent? Is that a problem for you?”

“Heck, yes. I’m paying their salaries! I want to know I’m getting their full value for what I’m paying them!”

“What if I told you you were probably getting more value than what you were paying, maybe one and a half to twice as much? Would you be happy with that?”

The manager calmed down, then turned to me and said, “How do you know?”

I smiled, and said, “That’s a different conversation.”

Too many managers believe in the myth of 100 percent utilization. That’s the belief that every single technical person must be fully utilized every single minute of every single day. The problem with this myth is that there is no time for innovation, no time for serendipitous thinking, no time for exploration.

And, worse, there’s gridlock. With 100 percent utilization, the very people you need on one project are already partially committed on another project. You can’t get together for a meeting. You can’t have a phone call. You can’t even respond to email in a reasonable time. Why? Because you’re late responding to the other interrupts.

How Did We Get Here?

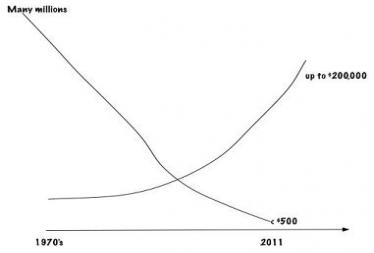

Back in the early days of computing, machines were orders of magnitude more expensive than programmers. In the 1970s, when I started working as a developer, companies could pay highly experienced programmers about $50,000 per year. You could pay those of us just out of school less than $15,000 per year, and we thought we were making huge sums of money. In contrast, companies either rented machines for many multiples of tens of thousands of dollars per year or bought them for millions. You can see that the scales of salaries to machine cost are not even close to equivalent.

When computers were that expensive, we utilized every second of machine time. We signed up for computer time. We desk-checked our work. We held design reviews and code reviews. We received minutes of computer time—yes, our jobs were often restricted to a minute of CPU time. If you wanted more time, you signed up for after-hours time, such as 2 a.m. to 4 a.m.

Realize that computer time was not the only expensive part of computing. Memory was expensive. Back in these old days, we had 256 bytes of memory and programmed in assembly language code. We had one page of code. If you had a routine that was longer than one page, you branched at the end of a page to another page that had room that you had to swap in. (Yes, often by hand. And, no, I am not nostalgic for the old days at all!)

In the late '70s and the '80s, minicomputers helped bring the money scales of pay and computer price closer. But it wasn't until minicomputers really came down in price and PCs started to dominate the market that the price of a developer became so much more expensive than the price of a computer. By then, many people thought it was cheaper for a developer to spend time one-on-one with the computer, not in design reviews or in code reviews, or discussing the architecture with others.

In the '90s, even as the prices of computers, disks, and memory fell, and as programmers and testers became more expensive, it was clear to some of us that software development was more collaborative than just a developer one on one with his computer. That's why Watts Humphrey and the Software Engineering Institute gained such traction during the '90s. Not because people liked heavyweight processes, but because, especially with a serial lifecycle, you had to do something to make system development more successful. And, many managers were stuck in 100 percent utilization thinking. Remember, it hadn't been that long since 100 percent utilization meant something significant.

Now, remember what it means when a computer is fully utilized and it’s a single-process machine: It can do only one thing at a time. It can’t service any interrupts. It can’t respond to any keystrokes. It can’t update its status. It can only keep processing until it’s done.

Now, if the program is well behaved, and is not I/O bound, or memory bound, or CPU bound, and it runs on a single-user, single-process machine, such as a personal calculator that only adds, subtracts, multiplies, and divides, that’s probably fine. But as soon as you add another user to the mix, or another process, you are in trouble. (Or, if the program is not well behaved and does not finish properly, you are in trouble.)

And that’s what we have with modern computers. Modern computers are multi-process machines.

With multi-process machines, if a computer is fully utilized, you have thrashing, and potential gridlock. Think of a highway at rush hour with no one moving; that's a highway at 100 percent utilization. We don't want highways at 100 percent utilization. We don't want current computers at 100 percent utilization either. If your computer gets to about 50 to 75 percent utilization, it feels slow. And, computers utilized at higher than 85 percent have unpredictable performance. Their throughput is unpredictable, and you can’t tell what’s going to happen.
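One way to put rough numbers on why high utilization feels slow is a textbook queueing model. The sketch below assumes a single server with randomly arriving work (the M/M/1 model), which is an illustrative assumption and not something measured for this article. Under that model, the average time a request spends in the system is the unloaded service time divided by (1 - utilization). The function name is hypothetical.

```python
def mean_response_time(service_time: float, utilization: float) -> float:
    """Average time in system for an M/M/1 queue: S / (1 - rho).

    Assumption: one server, random (Poisson) arrivals, exponential service.
    Real machines and real teams are messier; this only shows the shape.
    """
    if not 0 <= utilization < 1:
        raise ValueError("utilization must be at least 0 and below 1")
    return service_time / (1.0 - utilization)


# Compare response time against an idle system (service_time = 1.0).
for rho in (0.50, 0.75, 0.85, 0.95, 0.99):
    slowdown = mean_response_time(1.0, rho)
    print(f"{rho:.0%} utilized -> {slowdown:.1f}x the unloaded response time")
```

In this model the delay grows without bound as utilization approaches 100 percent, which is the highway-at-rush-hour effect in arithmetic form.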

Unfortunately, that’s precisely the same problem for people.

Why 100% Utilization Doesn't Work for People

Now, think of a human being. When we are at 100 percent utilization, we have no slack time at all. We run from one task or interrupt to another, not thinking. There are at least two things wrong with this picture: the inevitable multitasking and the not thinking.

We don't actually multitask at all; we fast-switch. And we are not like computers that, when they switch, write a perfect copy of what's in memory to disk and are able to read that back in again when it's time to swap that back in. Because we are human, we are unable to perfectly write out what's in our memory, and we imperfectly swap back in. So, there is a context switch cost in the swapping, because we have to remember what we were thinking of when we swapped out. And that takes time.

That cost is paid twice, once when we swap out and again when we swap back in, and all of that time and imperfection adds up. And, because we are human, we do not perfectly allocate our time first to one task and then to another. If we have three tasks, we don’t allocate 33 percent to each; we spend as much time as we please on each, all the while assuming we are spending 33 percent on each.
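To see how quickly the swapping adds up, here is a minimal sketch. It assumes each switch costs a fixed number of minutes of re-remembering; the 15-minute figure and the function name are hypothetical placeholders for illustration, not measurements.

```python
def productive_hours(day_hours: float, switches: int,
                     minutes_lost_per_switch: float) -> float:
    """Hours left for real work after paying a fixed cost per context switch.

    Assumption: every switch costs the same amount of time. In practice the
    cost varies by person and by task, so treat the result as a rough guide.
    """
    hours_lost = switches * minutes_lost_per_switch / 60.0
    return max(0.0, day_hours - hours_lost)


# An 8-hour day with a hypothetical 15 minutes lost per switch.
for switches in (0, 4, 10, 20):
    left = productive_hours(8.0, switches, 15.0)
    print(f"{switches:2d} switches -> {left:.1f} productive hours out of 8")
```

Even with a modest per-switch cost, a day full of interrupts leaves only a fraction of the time for actual work.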

Now, let me address the not-thinking part of 100 percent utilization. What if you want people to consider working in a new way? If you have them working at 100 percent utilization, will they? Not a chance. They can't consider it; they have no time.

So you get people performing their jobs by rote, servicing their interrupts in the best way they know how, doing as little as possible, doing enough to get by. They are not thinking of ways to improve. They are not thinking of ways to help others. They are not thinking of ways to innovate. They are thinking, "How the heck can I get out from under this mountain of work?" It's horrible for them, for the product, and for everyone they encounter.

When you ask people to work at 100 percent utilization, you get much less work out of them than when you plan for them to perform roughly six hours of technical work a day. People need time to read email, go to the occasional meeting, take bio breaks, have spirited discussions about the architecture or the coffee or something else. But if you plan for a good chunk of work in the morning and a couple of good chunks of work in the afternoon and keep the meetings to a minimum, technical people have done their fair share of work.

If you work in a meeting-happy organization, you can't plan on six hours of technical work; you have to plan on less. You're wasting people's time with meetings.
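The planning arithmetic can be sketched as follows. The six hours of technical work per day comes from the text above; the team size, iteration length, extra meeting hours, and function name are hypothetical values chosen only to illustrate the calculation.

```python
def iteration_capacity(people: int, days: int,
                       technical_hours_per_day: float = 6.0,
                       extra_meeting_hours_per_day: float = 0.0) -> float:
    """Planned hours of technical work for a team over one iteration.

    Assumption: extra meetings come straight out of technical time.
    """
    per_person_day = max(0.0, technical_hours_per_day - extra_meeting_hours_per_day)
    return people * days * per_person_day


team, days = 5, 10  # hypothetical team of five on a two-week iteration

naive = team * days * 8.0                      # planning at "100 percent"
realistic = iteration_capacity(team, days)     # six technical hours a day
meeting_happy = iteration_capacity(team, days, extra_meeting_hours_per_day=2.0)

print(f"Planned at 100 percent: {naive:.0f} hours")
print(f"Planned at six hours:   {realistic:.0f} hours")
print(f"Meeting-happy org:      {meeting_happy:.0f} hours")
```

A plan built on the first number is overcommitted by a quarter before any work starts, and by half in the meeting-happy case.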

But no matter what, if you plan on 100 percent utilization, you get much less done in the organization, you create a terrible environment for work, and you create an environment of no innovation. That doesn’t sound like a recipe for success, does it?

Agile and Lean Make the Myth Transparent

Agile and lean don’t make 100 percent utilization go away; they make the myth transparent. By making sure that all the work goes into a backlog, they help management and the teams see what everyone is supposed to be working on and how impossible that is. That’s the good news.

Once everyone can visualize the work, you can decide what to do about it. Maybe some of the work is really part of a roadmap, not part of this iteration’s work. Maybe some of the work is part of another project that should be postponed for another iteration. That’s great—that’s managing the project portfolio. Maybe some of the work should be done by someone, but not by this team. That’s great—that’s an impediment that a manager of some stripe needs to manage.

No matter what you do, you can’t do anything until you see the work. As long as you visualize the work in its entirety, you can manage it.

Remember, no one can do anything if they are 100 percent utilized. If you want to provide full value for your organization, you need to be “utilized” at about 50 to 60 percent. Because a mind, any mind, is a terrible thing to waste.

Read more of Johanna's management myth columns here:

- The Myth of 100% Utilization

- Only the 'Expert' Can Perform This Work

- We Must Treat Everyone the Same Way

- I Don't Need One-on-ones

- We Must Have an Objective Ranking System

- I Can Save Everyone

- I Am Too Valuable to Take a Vacation

- I Can Still Do Significant Technical Work

- We Have No Time for Training

- I Can Measure the Work by the Time People Spend at Work

- The Team Needs a Cheerleader!

- I Must Promote the Best Technical Person to Be a Manager

- I Must Never Admit My Mistakes

- I Must Always Have a Solution to the Problem

- I Know How Long the Work Should Take

- I Must Solve the Team’s Problem for Them

- I Can Move People Like Chess Pieces

- Management Doesn’t Look Difficult From the Outside, So It Must Be Easy

- I Can Compare Teams (and It’s Valuable to Do So)

- It’s Always Cheaper to Hire People Where the Wages Are Less Expensive

- If You’re Not Typing, You’re Not Working

- You Can Manage Any Number of People as a Manager

- People Don’t Need External Credit

- Performance Reviews Are Useful

- It's Fine to Micromanage

- We Can Take Hiring Shortcuts

- I Can Standardize How Other People Work

- I Can Concentrate on the Run

- I Am More Valuable than Other People

- I Don’t Have to Make the Difficult Choices

- I Can Treat People as Interchangeable Resources

- We Need a Quick Fix or a Silver Bullet

- You're Empowered Because I Say You Are

- Friendly Competition Is Constructive

- You Have an Indispensable Employee

User Comments

Great points Johanna.

Most places I've seen aim for the 6-hour (75%) target, although I'd have to agree that 50-60% is a better target for most development teams and could vary slightly by developer.

You touched on allowing time for thinking to occur in the day-to-day cycle. I think you must also allocate time for research to happen. In order for development teams to continue to innovate and provide alternative solutions to ever more challenging problems, they must have ample time to research new technologies.

I like to tell my teams to focus on three initiatives:

1. Coding

2. Thinking

3. Research

I like the way you separate research from thinking, Mike. Good idea! Make it transparent...

I love the myth of 100% utilization and I have seen it just about every place I have worked.

Doug, thank you. Yes. I have seen it also, almost everywhere.

Part of the myth is that we, the workers, think we can work long hours and that's okay. I don't know about your university experience, but mine was full of people who said, "I can solve any problem inside a semester with a sufficient number of all-nighters." In a sense, we are trained to think this way.

I first stopped thinking this way when I took summer school classes (at university). I had one class at a time for 2-, 3- or 4-weeks. I found I was able to focus on one thing for the entire time and finish it. I was much more productive. I suspect many people have not had my experience.
